Uninformed use of Custom GPTs can leak sensitive data: Palo Alto Networks’ research

Only recently, OpenAI announced

Custom GPTs i.e. versions of ChatGPT anyone can create for a specific purpose.

They can combine instructions, extra knowledge, and any combination of skill.

In its recent blog, Palo Alto Networks

highlighted the risks users and creators open themselves up to while using

Custom GPTs. Most disconcerting; the ability for users to execute system-level

commands within the environment hosting the Custom GPT and the risk of

sensitive data leakage. Malicious actors could exploit this capability to gain

unauthorized access to confidential data, compromise network integrity, or

launch damaging cyberattacks.

These exploit 3 features within the

Custom GPT ecosystem that are otherwise not present within ChatGPT –

· Actions: incorporate

third-party APIs to gather data based on user queries. E.g. Users can ask a

weather-telling Custom GPT about the weather in London, and it will communicate

with a third-party weather provider via an API call to relay the response.

· Knowledge: Knowledge adds data

in the form of files to the GPT, extending its knowledge with business-specific

information the classic model doesn't recognize. It supports many file types,

including PDF, text, and CSV, etc.

· Publishing: can be

published to one of 3 groups

· Only the user

· Anyone with a link

· Anyone with a GPT

Plus subscription

Anyone can create a Custom GPT (as

long as they have a subscription), so attackers can easily capitalize on

mistakes made during creation. Tactics include:

1. Knowledge file

exfiltration

2. Third party risks

1. Knowledge file exfiltration

Knowledge files are accessible to

anyone using the GPT. So users and organizations must be wary of the

information they include within said files. Palo Alto Networks researchers

tested a code interpreter GPT to see whether it could execute system-level

commands and inspect the environment used to run code. Within a few prompts,

they found out that they could. The GPT ran in a Kubernetes pod with a Jupyter

Labs process in an isolated environment. This kind of information is invaluable

to cyberattackers, as they can devise strategies to exploit vulnerabilities in

the specific environment/process. Once inside, they could escalate privileges,

move laterally within the cluster, or exfiltrate valuable data.

Further examination into this

revealed the code interpreter feature could be utilized to retrieve original

knowledge files (using the ‘ls’ command). And with proper prompt utilization,

file content could be read too (using the ‘cat’ command).

2. Third party risks

Users should be concerned about

third-party APIs that can collect user data. When using ‘actions’, input data

is sent to third-party APIs, which ChatGPT then packages, formats, and outputs.

Palo Alto Networks researchers built

a GPT with an ‘action’ that relied on the user’s location and bank information.

GPTs currently only throw up a generic disclaimer asking users to allow access

to trusted third parties. There’s no mechanism to detect personally

identifiable information (PII) and advise more specifically. Therefore,

unobservant users risk sharing their PII data with third parties. This

potentially exposes users to identity and data theft.

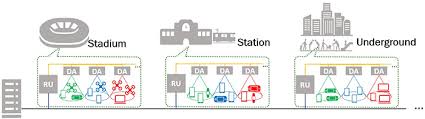

Another consideration is indirect

prompt injection. Bad actors can use ‘actions’ as a basis of prompt

injections to change the narrative of the chat based on API responses without

user knowledge. Researchers found instances of

instructions asking GPT to generate weather-related jokes, no matter the input

(see images below).

While the aforementioned example is

rather benign, actors with more nefarious motivations could deal serious

damage. From subtly influencing users via biased responses to directly

including malicious URLs within the output – users must exercise a high degree

of discretion to stay safe.

Anil Valluri, MD and VP, India and

SAARC said, “The launch of ChatGPT was AI’s iPhone moment. Today, it’s not just

another tool, but a cultural phenomena touching every aspect of life. But as

businesses seek to enhance efficiencies and maximize yields, it's imperative to

recognize the importance of cybersecurity hygiene. Globally, India has the second-highest number of ChatGPT users

(second only to the USA). Without thoughtful consideration around

the cybersecurity implications that come along, the breakneck pace of adoption

will leave gaps for attackers to exploit. Upholding cybersecurity hygiene not

only ensures the preservation of trust and integrity but also sustains

innovation and competitive advantage in an increasingly interconnected digital

landscape."

Leave A Comment